AMD today held a roughly two-hour conference in San Francisco during which CEO Lisa Su and other executives introduced a new generation of server processors, the next model in the Instinct MI300 Accelerator family, and new data-center networking devices.

As Su told the live and online audience, AMD is committed to offering end-to-end AI infrastructure products and solutions in an open, partner-dependent ecosystem.

Su further explained that AMD’s new AI strategy has four main goals:

- Become the leader in end-to-end AI

- Create an open AI software platform of libraries and models

- Co-innovate with partners including cloud providers, OEMs and software creators

- Offer all the pieces needed for a total AI solution, all the way from chips to racks to clusters and even entire data centers.

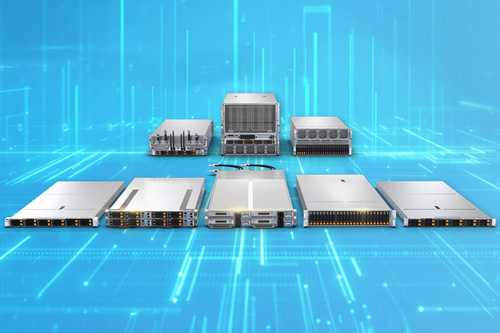

And here’s a look at the new data-center hardware AMD announced today.

5th Gen AMD EPYC CPUs

The EPYC line, originally launched in 2017, has become a big success for AMD. As Su told the event audience, there are now more than 950 EPYC instances at the largest cloud providers, and AMD hardware partners now offer EPYC processors on more than 350 platforms. Market share is up, too: About one in three servers worldwide (34%) now runs on EPYC, Su said.

The new EPYC processors, formerly codenamed Turin and now known as the AMD EPYC 9005 Series, are now available for data center, AI and cloud customers.

The new CPUs also have a new core architecture known as Zen 5. AMD says Zen 5 outperforms the previous Zen 4 generation by 17% on enterprise instructions-per-clock and up to 37% on AI and HPC workloads.

The new 5th Gen line has over 25 SKUs, and core counts range widely, from as few as 8 to as many as 192. For example, the new AMD EPYC 9575F is a 64-core, 5GHz CPU designed specifically for GPU-powered AI solutions.

AMD Instinct MI325X Accelerator

About a year ago, AMD introduced the Instinct MI300 Accelerators, and since then the company has committed itself to introducing new models on a yearly cadence. Sure enough, today Lisa Su introduced the newest model, the AMD Instinct MI325X Accelerator.

Designed for generative AI performance and built on the AMD CDNA3 architecture, the new accelerator offers up to 256GB of HBM3E memory and memory bandwidth of up to 6TB/sec.

Shipments of the MI325X are set to begin in this year’s fourth quarter. Partner systems with the new AMD accelerator are expected to start shipping in next year’s first quarter.

Su also mentioned the next model in the line, the AMD Instinct MI350, which will offer up to 288GB of HBM3E memory. It’s set to be formally announced in the second half of next year.

Networking Devices

Forrest Norrod, AMD’s head of data-center solutions, introduced two networking devices designed for data centers running AI workloads.

The AMD Pensando Salina DPU is designed for front-end connectivity. It supports throughput of up to 400 Gbps.

The AMD Pensando Pollara 400, designed for back-end networks connecting multiple GPUs, is the industry’s first Ultra Ethernet Consortium (UEC)-ready AI NIC.

Both parts are sampling with customers now, and AMD expects to start general shipments in next year’s first half.

Both devices are needed, Norrod said, because AI dramatically raises networking demands. He cited studies showing that connectivity currently accounts for 40% to 75% of the time needed to run certain AI training and inference models.
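To put that range in perspective, here is a rough, back-of-the-envelope sketch in Python. It applies the standard Amdahl's-law formula to the 40% and 75% figures Norrod cited. The function name and the 2x networking speedup are assumptions chosen purely for illustration; they are not AMD figures or claims about either device.

```python
def overall_speedup(network_fraction: float, network_speedup: float) -> float:
    """Amdahl's-law estimate of the overall speedup when only the
    networking share of the run time gets faster."""
    if not 0.0 <= network_fraction <= 1.0:
        raise ValueError("network_fraction must be between 0 and 1")
    if network_speedup <= 0:
        raise ValueError("network_speedup must be positive")
    return 1.0 / ((1.0 - network_fraction) + network_fraction / network_speedup)


# The 40% and 75% shares are the range cited in the article; the 2x
# networking speedup is a hypothetical value used only for illustration.
for fraction in (0.40, 0.75):
    speedup = overall_speedup(fraction, 2.0)
    print(f"{fraction:.0%} networking share -> {speedup:.2f}x overall")
```

Under these assumptions, halving networking time would yield roughly a 1.25x overall gain at the low end of the cited range and about 1.6x at the high end, which helps explain why faster interconnects matter so much for AI clusters.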

Supermicro Support

Supermicro is among the AMD partners that already have systems based on the new AMD processors and accelerator.

Wasting no time, Supermicro today announced new H14 series servers, including both Hyper and FlexTwin systems, that support the 5th Gen AMD EPYC 9005 Series processors and AMD Instinct MI325X Accelerators.

The Supermicro H14 family includes three systems for AI training and inference workloads. Supermicro says the systems can also accommodate the higher thermal requirements of the new AMD EPYC processors, which are rated at up to 500W. Liquid cooling is an option, too.

Do More:

- Learn more about the 5th Gen AMD EPYC processors

- Explore the AMD Instinct Accelerators

- Meet the new Supermicro H14 family

- Watch a video replay of AMD’s Advancing AI event