As AI powered by GPUs transforms computing, conventional DDR memory can’t keep up.

The solution? High-bandwidth memory (HBM).

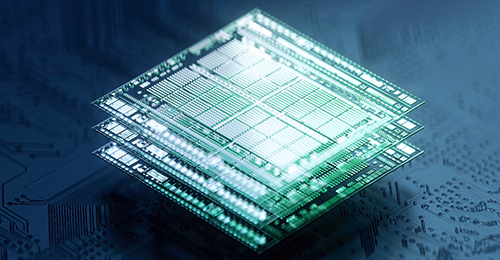

HBM is a memory technology that essentially shortens the information commute. The memory sits right next to the processor, and the two communicate over ultra-wide lanes: 1,024 bits per HBM stack.

An HBM device contains vertically stacked DRAM dies. They're interconnected by through-silicon vias, or TSVs for short: microscopic vertical connections that pass straight through the silicon.

HBM also provides more bandwidth per watt than conventional DDR memory. And with its smaller footprint, the technology can also save valuable data-center space.

Here's how: A single HBM stack can contain up to eight DRAM dies, with each die connected by two channels. As a result, an HBM implementation of just four stacks is roughly equivalent to 30 DDR modules, in a fraction of the space.

All this makes HBM ideal for workloads such as AI and machine learning, HPC, advanced graphics and data analytics.

Latest & Greatest

The latest iteration, HBM3, was introduced in 2022, and it’s now finding wide application in market-ready systems.

Compared with the previous generation, HBM3 adds several enhancements:

- Higher bandwidth: Up to 819 GB/sec. per stack, up from HBM2E's max of 460 GB/sec.

- More memory capacity: Up to 24GB per stack, up from HBM2's 8GB

- Improved power efficiency: Delivering more data throughput per watt

- Reduced form factor: Thanks to a more compact design
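Those bandwidth numbers come from a simple formula: interface width times per-pin data rate, divided by eight to convert bits to bytes. The sketch below assumes a 1,024-bit interface per stack and top per-pin rates of 6.4 Gb/s for HBM3 and 3.6 Gb/s for the fastest HBM2E parts. The per-pin rates are our assumptions, chosen because they reproduce the quoted figures; they are not stated in this article.

```python
# Peak bandwidth of one HBM stack = interface width (bits) x per-pin rate (Gb/s) / 8.
# Assumed: 1024-bit interface per stack; 6.4 Gb/s per pin (HBM3), 3.6 Gb/s (HBM2E).

INTERFACE_WIDTH_BITS = 1024  # data pins per stack (assumed same for HBM2E and HBM3)

PIN_RATE_GBPS = {
    "HBM2E": 3.6,  # assumed top per-pin rate, Gb/s
    "HBM3": 6.4,   # assumed top per-pin rate, Gb/s
}


def stack_bandwidth_gbytes_per_s(pin_rate_gbps: float,
                                 width_bits: int = INTERFACE_WIDTH_BITS) -> float:
    """Return peak per-stack bandwidth in GB/s (divide by 8: bits -> bytes)."""
    return width_bits * pin_rate_gbps / 8


if __name__ == "__main__":
    for gen, rate in PIN_RATE_GBPS.items():
        print(f"{gen}: {stack_bandwidth_gbytes_per_s(rate):.1f} GB/s per stack")
```

Run as a script, it prints 460.8 GB/s for HBM2E and 819.2 GB/s for HBM3. Because the interface width stays the same, nearly all of HBM3's gain in this sketch comes from the faster per-pin rate.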

However, it’s not all sunshine and rainbows. For one, HBM-equipped systems are more expensive than those fitted out with traditional memory solutions.

Also, HBM stacks generate considerable heat. Advanced cooling systems are often needed, adding further complexity and cost.

Compatibility is yet another challenge. Because HBM is packaged right alongside the processor, typically on a silicon interposer, systems must be designed or adapted around HBM3's unique interface and form factor.

In the Market

As mentioned above, HBM3 is showing up in new products. Those include both the AMD Instinct MI300A and MI300X series accelerators.

The AMD Instinct MI300A accelerator combines CPU and GPU cores for running HPC and AI workloads. Its dedicated HBM3 memory is unified, shared by the CPU and GPU alike, with a capacity of up to 128GB.

The AMD Instinct MI300X, by contrast, is a GPU-only accelerator designed for low-latency AI processing. It also uses HBM3 as its dedicated memory, but with a higher capacity of up to 192GB.

For both of these AMD Instinct MI300 accelerators, the peak theoretical memory bandwidth is a speedy 5.3TB/sec.
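As a rough sanity check, the 5.3TB/sec. figure can be worked backward. This sketch assumes each accelerator carries eight HBM3 stacks, each with a 1,024-bit interface; the stack count and width are our assumptions, not figures from this article. Under them, each stack supplies 662.5GB/sec., implying a per-pin rate of about 5.2 Gb/s, and per-stack capacities of 16GB (MI300A) and 24GB (MI300X).

```python
# Work the quoted 5.3TB/sec. peak bandwidth back to per-stack and per-pin figures.
# Assumed: eight HBM3 stacks per accelerator, each with a 1024-bit interface.

STACKS = 8
WIDTH_BITS_PER_STACK = 1024
PEAK_TB_PER_S = 5.3  # quoted peak theoretical bandwidth (MI300A and MI300X)

CAPACITY_GB = {"MI300A": 128, "MI300X": 192}  # quoted HBM3 capacities


def per_stack_gb_per_s(peak_tb_per_s: float = PEAK_TB_PER_S,
                       stacks: int = STACKS) -> float:
    """Peak bandwidth each stack must supply, in GB/s (decimal units)."""
    return peak_tb_per_s * 1000 / stacks


def implied_pin_rate_gbps(width_bits: int = WIDTH_BITS_PER_STACK) -> float:
    """Per-pin data rate (Gb/s) implied by the per-stack bandwidth."""
    return per_stack_gb_per_s() * 8 / width_bits


if __name__ == "__main__":
    print(f"Per stack: {per_stack_gb_per_s():.1f} GB/s")
    print(f"Implied per-pin rate: {implied_pin_rate_gbps():.2f} Gb/s")
    for part, total_gb in CAPACITY_GB.items():
        print(f"{part}: {total_gb / STACKS:.0f} GB per stack")
```

If the assumptions hold, that per-stack figure sits below the 819 GB/sec. HBM3 maximum cited above, so these accelerators get their bandwidth mainly from running many stacks in parallel.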

The AMD Instinct MI300X also powers Supermicro's AS -8125GS-TNMR2, an H13 8U system with eight MI300X GPUs. This system offers a huge 1.5TB of HBM3 memory in single-server mode, and an even larger 6.144TB at rack scale.
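The capacity figures are straightforward multiplication. In this sketch, eight MI300X GPUs at 192GB each give 1,536GB (the 1.5TB figure, rounded), and dividing the quoted 6.144TB rack total by that amount implies four servers per rack. The four-server count is our inference from the arithmetic, not a stated spec, and decimal units (1TB = 1,000GB) are assumed.

```python
# Capacity arithmetic for an 8-GPU MI300X server and the quoted rack-scale total.
# Decimal units assumed (1TB = 1,000GB), matching how the figures are quoted.

GPUS_PER_SERVER = 8
HBM3_PER_GPU_GB = 192
RACK_HBM3_GB = 6144  # quoted rack-scale figure, 6.144TB


def server_hbm_gb(gpus: int = GPUS_PER_SERVER,
                  per_gpu_gb: int = HBM3_PER_GPU_GB) -> int:
    """Total HBM3 in one server, in GB."""
    return gpus * per_gpu_gb


def implied_servers_per_rack(rack_gb: int = RACK_HBM3_GB) -> int:
    """Servers implied by the rack total (an inference, not a published spec)."""
    per_server = server_hbm_gb()
    if rack_gb % per_server != 0:
        raise ValueError("rack total is not a whole number of servers")
    return rack_gb // per_server


if __name__ == "__main__":
    print(f"Per server: {server_hbm_gb()} GB")                        # 1536 GB
    print(f"Implied servers per rack: {implied_servers_per_rack()}")  # 4
```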

Are your customers running AI with fast GPUs, only to have their systems held back by conventional memory? Tell them to check out HBM.

Do More:

- Check out the Supermicro glossary: What is HBM3?

- Get tech specs (including HBM) for the AMD Instinct MI300 series accelerators

- Download the datasheet: Supermicro's H13 8U 8-GPU system powered by AMD Instinct MI300X accelerators