Where Machine Learning (ML) is designed to reduce the need for human intervention, Deep Learning (DL), an extension of ML, removes much of that human element.

If ML were a driver-assistance feature that helped you parallel park and avoid collisions, DL would be an autonomous, self-driving car.

The human intervention we’re talking about has much to do with categorizing and labeling the data used by ML models. Producing this structured data is both time-consuming and expensive.

DL shortens the time and lowers the cost by learning from unstructured data. This eliminates much of the data pre-processing that humans perform for ML.

That’s good news for modern businesses. Market watcher IDC estimates that as much as 90% of corporate data is unstructured.

DL is particularly good at processing this kind of data, including information coming from the edge, the core and millions of personal and IoT devices.

Like a brain, but digital

Deep Learning systems “think” with a neural network: multiple layers of interconnected nodes loosely inspired by the way neurons in the human brain connect. A DL system processes data inputs in an attempt to recognize, classify and accurately describe objects within data.

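The layers of a neural network are stacked one after another. Each layer takes the output of the layer before it and builds progressively more abstract features on top of it. During training, the network compares its output with the correct answer and adjusts the connections in every layer, which is how its predictions and categorizations improve.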
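To make the idea of stacked layers more concrete, here is a minimal Python sketch of data flowing through a small, fully connected network. It's an illustration under stated assumptions, not how any production DL framework is implemented: the layer sizes, the four made-up input features and the "Pringles"/"Doritos" labels are hypothetical, and the weights are random rather than learned, so the output probabilities mean nothing until the network is trained.

```python
import math
import random

def relu(values):
    """Zero out negative activations (a common non-linearity between layers)."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: each output node is a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

def make_layer(n_in, n_out, rng):
    """Hypothetical randomly initialized layer; a real network would learn these values in training."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(inputs, layers):
    """Push data through each layer in turn; every layer consumes the previous layer's output."""
    activations = inputs
    for i, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if i < len(layers) - 1:  # hidden layers get a non-linearity; the output layer does not
            activations = relu(activations)
    return activations

def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

rng = random.Random(0)
# Hypothetical sizes: 4 input features -> 8 -> 8 hidden nodes -> 2 classes
layers = [make_layer(4, 8, rng), make_layer(8, 8, rng), make_layer(8, 2, rng)]
features = [0.9, 0.1, 0.4, 0.7]  # made-up features, e.g. summary statistics of an image
probs = softmax(forward(features, layers))
print(dict(zip(["Pringles", "Doritos"], probs)))
```

Real DL frameworks do the same layer-by-layer arithmetic with large matrix operations on GPUs and other accelerators, which is why that hardware matters so much for deep learning.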

For instance, imagine you’ve tasked a DL system with identifying pictures of junk food. The system would quickly learn, on its own, how to tell Pringles from Doritos.

It might do this by learning to recognize Pringles’ iconic tubular packaging. The system would then place Pringles in a different category from the family-size sack of Doritos.

What if you fed this hypothetical DL system more pictures of chips? It could then begin to identify varying angles of packaging, as well as colors, logos, shapes and granular aspects of the chips themselves.

As this example illustrates, the more examples a DL system is trained on, the more capable and accurate it tends to become.
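To show what "learning from examples" means in practice, here is a toy Python sketch. To be clear, it is a single artificial neuron (logistic regression), not a deep network, and the two features and four labeled examples are made up for illustration. The principle it shows, nudging weights to reduce error on labeled examples, is the same one DL networks apply across many layers via backpropagation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability that example x belongs to class 1 (here: "Pringles")."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def log_loss(weights, bias, data):
    """Average cross-entropy loss: lower means predictions match the labels better."""
    eps = 1e-12  # avoid log(0)
    total = 0.0
    for x, y in data:
        p = predict(weights, bias, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)

def train(data, epochs, lr=0.5):
    """Plain gradient descent: repeatedly nudge the weights to reduce the loss."""
    n_features = len(data[0][0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in data:
            error = predict(weights, bias, x) - y  # gradient of log loss w.r.t. the weighted sum
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        weights = [w - lr * g / len(data) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(data)
    return weights, bias

# Hypothetical toy features: [tube_shape_score, bag_shape_score]; label 1 = Pringles, 0 = Doritos
data = [([0.9, 0.1], 1), ([0.8, 0.3], 1), ([0.2, 0.9], 0), ([0.1, 0.7], 0)]

for epochs in (0, 10, 100):
    w, b = train(data, epochs)
    print(epochs, round(log_loss(w, b, data), 4))
```

Running it prints a loss that shrinks as training continues, which is the small-scale version of a DL model getting better with more training.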

Things we used to do

DL tends to be deployed when it’s time to pull out the big guns. It can power everyday tools such as spam filters and recommendation engines, but it really earns its keep in demanding fields such as finance, biomedical research and law enforcement, where failure is simply not an option.

Here are some of the ways DL operates behind the scenes in those verticals:

- BioMed: DL helps healthcare staff analyze medical imaging such as X-rays and CT scans. In some studies, DL models have matched or even exceeded the accuracy of experienced physicians on specific diagnostic tasks.

- Finance: For those seeking a market edge (read: everyone), DL powers algorithmic predictive analytics. This helps modern-day robber barons manage their portfolios based on insights from data so vast that they couldn’t analyze it themselves. DL also helps financial institutions assess loans, detect fraud and manage credit.

- Law Enforcement: In the 2002 movie “Minority Report,” Tom Cruise played a police officer who could arrest people before they committed a crime. With DL, this fiction could turn into an unsettling reality. DL can be used to analyze millions of data points, then predict who is most likely to break the law. It might even give authorities an idea of where, when and how it could happen.

The future…?

Looking into a crystal ball—which these days probably uses DL—we can see a long succession of similar technologies coming. Just as ML begat DL, so too will DL beget the next form of AI—and the one after that.

The future of DL isn’t a question of if, but when. Clearly, DL will be used to advance a growing number of industries. But just when each sector will come to be ruled by our new smarty-pants robots is less clear.

Keep in mind: Even as you read this, data scientists are using DL to make AI more accurate and to extract more useful insights from datasets for specific outcomes. And as the science progresses, neural networks will likely continue to grow larger, more complex and, perhaps, more brain-like.

That means the next generation of DL will likely be far more capable than the current one. Future AI systems could figure out how to reverse the aging process, map distant galaxies, even produce bespoke food based on biometric feedback from hungry diners.

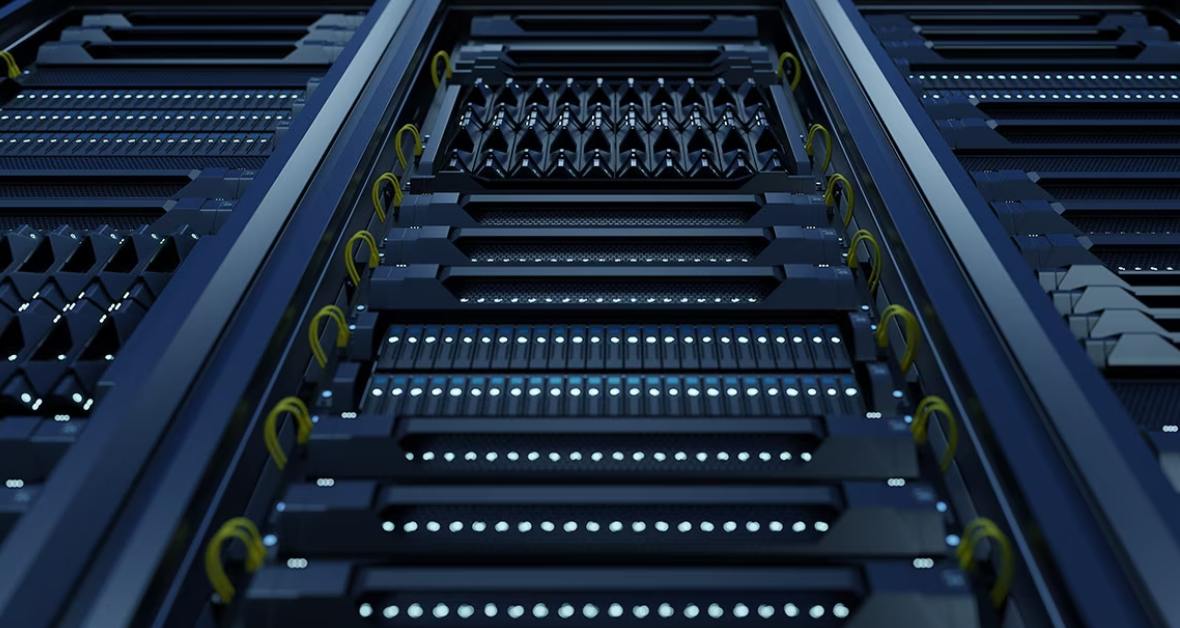

Hardware is advancing, too. For example, the upcoming AMD Instinct MI300 accelerators promise to usher in a new era of computing capabilities. That includes the ability to handle large language models (LLMs), the technology behind generative AI systems such as ChatGPT.

Yes, the robots are here, and they want to feed you custom Pringles. Bon appétit!

Do more:

- Read Part 1 of this Tech Explainer: What’s the difference between machine learning and deep learning?