When it comes to building AI systems for your customers, a certain GPU provider with a trillion-dollar valuation isn’t the only game in town. You should also consider the dynamic duo of AMD and Supermicro, which are jointly offering high-performance AI alternatives with superior price and performance.

Supermicro’s Universal GPU systems are designed specifically for large-scale AI and high-performance computing (HPC) applications. Some of these modular designs come equipped with AMD’s Instinct MI250 Accelerator and can optionally be powered by dual AMD EPYC processors.

AMD, with a newly formed AI group led by Victor Peng, is working hard to enable AI across many environments. The company has developed an open software stack for AI, and it has also expanded its partnerships with AI software and framework suppliers that now include the PyTorch Foundation and Hugging Face.

AI accelerators

In addition, AMD’s Instinct MI300A data-center accelerator is due to ship in this year’s fourth quarter. It’s the successor to AMD’s MI200 series, which is based on the company’s CDNA 2 architecture, introduced its first multi-die GPU, and powers some of today’s fastest supercomputers.

The forthcoming Instinct MI300A is based on AMD’s CDNA 3 architecture for AI and HPC workloads, which uses 5nm and 6nm process tech and advanced chiplet packaging. Under the MI300A’s hood, you’ll find 24 processor cores with Zen 4 tech, as well as 128GB of HBM3 memory that’s shared by the CPU and GPU. And it supports AMD ROCm 5, a production-ready, open source HPC and AI software stack.

Earlier this month, AMD introduced another member of the series, the AMD Instinct MI300X. It replaces three Zen 4 CPU chiplets with two CDNA 3 chiplets to create a GPU-only accelerator. Announced at AMD’s recent Data Center and AI Technology Premiere event, the MI300X is optimized for large language models (LLMs) and other forms of AI.

To accommodate the demanding memory needs of generative AI workloads, the new AMD Instinct MI300X also adds 64GB of HBM3 memory, for a new total of 192GB. This means a single accelerator can hold larger models entirely in memory, which reduces the number of GPUs needed, improves performance, and lowers the user’s total cost of ownership (TCO).

AMD also recently introduced the AMD Instinct Platform, which puts eight MI300X accelerators and 1.5TB of HBM3 memory in a standard Open Compute Project (OCP) infrastructure. It’s designed to drop into an end user’s current IT infrastructure with only minimal changes.
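To make the memory figures above concrete, here is a minimal back-of-the-envelope sketch in Python. It assumes model weights are stored in 16-bit precision (2 bytes per parameter) and counts the weights only, ignoring activations, KV cache, and optimizer state, so real deployments will need more headroom. The function names and the 70-billion-parameter example are hypothetical illustrations, not AMD figures.

```python
import math

# Assumption: weights stored in a 16-bit format (fp16/bf16), 2 bytes each.
BYTES_PER_PARAM_16BIT = 2

# Capacities taken from the article.
MI300A_HBM_GB = 128
MI300X_HBM_GB = MI300A_HBM_GB + 64    # 192 GB
PLATFORM_HBM_GB = 8 * MI300X_HBM_GB   # 1,536 GB, roughly 1.5 TB


def weight_footprint_gb(num_params: float) -> float:
    """Approximate size of the model weights alone, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM_16BIT / 1e9


def accelerators_needed(num_params: float, hbm_gb: float) -> int:
    """Fewest accelerators whose combined memory holds the weights (weights only)."""
    return math.ceil(weight_footprint_gb(num_params) / hbm_gb)


if __name__ == "__main__":
    # 70e9 is a hypothetical model size chosen for illustration.
    for params in (11e9, 70e9, 200e9):
        print(
            f"{params / 1e9:.0f}B params: "
            f"{weight_footprint_gb(params):.0f} GB of weights, "
            f"{accelerators_needed(params, MI300A_HBM_GB)} x 128 GB or "
            f"{accelerators_needed(params, MI300X_HBM_GB)} x 192 GB accelerators"
        )
    print(f"Eight MI300X accelerators: {PLATFORM_HBM_GB} GB in total")
```

Under these assumptions, a hypothetical 70-billion-parameter model (about 140GB of weights) fits on one 192GB accelerator but would need two 128GB accelerators, which is the kind of GPU-count reduction the larger memory is meant to deliver.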

All this is coming soon. The AMD MI300A started sampling with select customers earlier this quarter. The MI300X and Instinct Platform are both set to begin sampling in the third quarter. Production of the hardware products is expected to ramp in the fourth quarter.

KT’s cloud

All that may sound good in theory, but how does the AMD + Supermicro combination work in the real world of AI?

Just ask KT Cloud, a South Korea-based provider of cloud services that include infrastructure, platform and software as a service (IaaS, PaaS, SaaS). With the rise of customer interest in AI, KT Cloud set out to develop new XaaS customer offerings around AI, while also developing its own in-house AI models.

However, as KT embarked on this AI journey, the company quickly encountered three major challenges:

- The high cost of AI GPU accelerators: KT Cloud would need hundreds of thousands of new GPU servers.

- Inefficient use of GPU resources in the cloud: Few cloud providers offer GPU virtualization due to overhead. As a result, most cloud-based GPUs are visible to only one virtual machine, meaning they cannot be shared by multiple users.

- Difficulty using large GPU clusters: KT is training Korean-language models with billions of parameters, requiring more than 1,000 GPUs. But this is complex: Users would need to manually apply parallelization strategies and optimization techniques.

The solution: KT worked with Moreh Inc., a South Korean developer of AI software, and AMD to design a novel platform architecture powered by AMD’s Instinct MI250 Accelerators and Moreh’s software.

Moreh developed the entire AI software stack, from the PyTorch and TensorFlow APIs down to GPU-accelerated primitive operations. This overcomes the limitations of cloud services and large AI model training.

Users do not need to insert or modify even a single line of existing source code to run on Moreh’s MoAI platform. They also do not need to change the way they run a PyTorch or TensorFlow program.

Did it work?

In a word, yes. To test the setup, KT developed a Korean language model with 11 billion parameters. Training was then done on two machines: one using Nvidia GPUs, the other being the AMD/Moreh cluster equipped with AMD Instinct MI250 accelerators, Supermicro Universal GPU systems, and the Moreh AI platform software.

Compared with the Nvidia system, the Moreh solution with AMD Instinct accelerators delivered 116% of the throughput (measured in tokens trained per second) and 2.05x higher cost-effectiveness (measured as throughput per dollar).
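The two ratios above are related through the "throughput per dollar" definition, and a short Python sketch shows what they imply together. It assumes the 2.05x figure is a straight ratio of throughput per dollar between the two systems. The resulting relative cost is a derived illustration, not a number reported by KT Cloud, AMD, or Moreh, and the function names are hypothetical.

```python
# Ratios reported in the article (AMD/Moreh system relative to the Nvidia system).
THROUGHPUT_RATIO = 1.16           # 116% of the tokens trained per second
COST_EFFECTIVENESS_RATIO = 2.05   # assumed to be a ratio of throughput per dollar


def cost_effectiveness(throughput: float, cost: float) -> float:
    """Throughput per dollar, the metric named in the article."""
    return throughput / cost


def implied_cost_ratio(throughput_ratio: float, cost_effectiveness_ratio: float) -> float:
    """Relative cost implied by the two ratios.

    Since cost_effectiveness = throughput / cost, it follows that
    cost_ratio = throughput_ratio / cost_effectiveness_ratio.
    """
    return throughput_ratio / cost_effectiveness_ratio


if __name__ == "__main__":
    ratio = implied_cost_ratio(THROUGHPUT_RATIO, COST_EFFECTIVENESS_RATIO)
    print(f"Implied relative cost: {ratio:.1%} of the baseline system")

    # Sanity check: plugging the implied cost back in recovers the 2.05x figure.
    baseline = cost_effectiveness(1.0, 1.0)
    candidate = cost_effectiveness(THROUGHPUT_RATIO, ratio)
    print(f"Recovered cost-effectiveness ratio: {candidate / baseline:.2f}x")
```

Read this way, the reported figures would correspond to a system that costs a little under 60% as much as the baseline. The article does not say which costs "per dollar" covers, so treat this as a rough consistency check only.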

Other gains are expected, too. “With cost-effective AMD Instinct accelerators and a pay-as-you-go pricing model, KT Cloud expects to be able to reduce the effective price of its GPU cloud service by 70%,” says JooSung Kim, VP of KT Cloud.

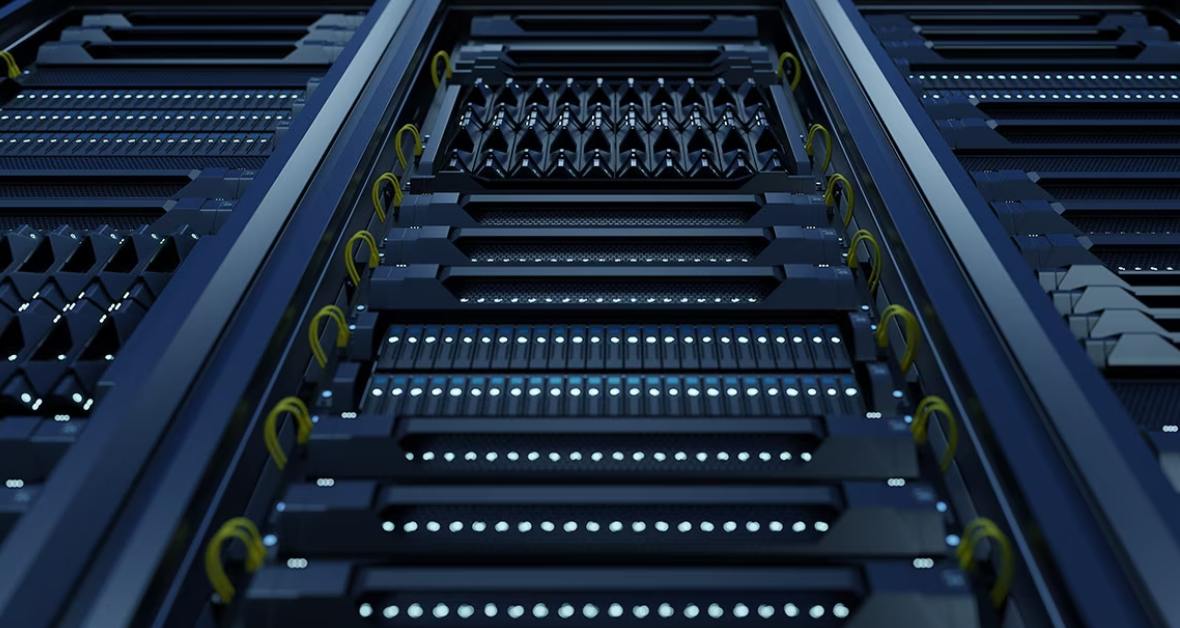

Based on this test, KT built a larger AMD/Moreh cluster of 300 nodes—with a total of 1,200 AMD MI250 GPUs—to train the next version of the Korean language model with 200 billion parameters.

It delivers a theoretical peak performance of 434.5 petaflops for fp16/bf16 (a native 16-bit format for mixed-precision training) matrix operations. That should make it one of the top-tier GPU supercomputers in the world.
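The cluster's peak figure can be reproduced with simple arithmetic. The Python sketch below assumes a per-accelerator peak of 362.1 teraflops for fp16/bf16 matrix operations, which is the figure AMD publishes for the Instinct MI250 and is not stated in this article. The four-GPUs-per-node value is derived from the article's 1,200 GPUs across 300 nodes.

```python
NODES = 300
GPUS_PER_NODE = 4  # derived from the article: 1,200 GPUs / 300 nodes

# Assumption: AMD's published peak fp16/bf16 matrix throughput for one MI250.
MI250_PEAK_FP16_TFLOPS = 362.1


def cluster_peak_pflops(nodes: int, gpus_per_node: int, tflops_per_gpu: float) -> float:
    """Theoretical peak of the whole cluster in petaflops (1 PF = 1,000 TF)."""
    return nodes * gpus_per_node * tflops_per_gpu / 1000


if __name__ == "__main__":
    total_gpus = NODES * GPUS_PER_NODE
    peak = cluster_peak_pflops(NODES, GPUS_PER_NODE, MI250_PEAK_FP16_TFLOPS)
    print(f"{total_gpus} GPUs, theoretical peak of about {peak:.1f} petaflops")
```

This is a theoretical ceiling: sustained training throughput depends on the interconnect, the parallelization strategy, and the model itself, and it will be lower than the peak.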

Do more:

- Check out Supermicro Universal GPU systems

- Read the case study: KT Cloud set to expand AI potential with AMD Instinct accelerators

- Watch the video of AMD CEO Lisa Su’s presentation on new Instinct MI Series hardware (starts at 1:18:00)